When Dana Burgess got his start on vision systems at a Grand Rapids automotive supplier in the early 2000s, machine vision was a different game. He started in quality control, where installing cameras was the most common response to quality problems reported by the supplier’s customers. Cameras were the manufacturer’s best way to implement poka-yoke (Japanese for “error-proofing”).

The simplest cameras would scan barcodes. And in more technical applications, these cameras, which typically captured still, 2D black-and-white images, were designed to inspect one specific aspect of product quality (e.g., “is that gasket installed in the right location of this part?”).

They required near-perfect lighting, and the object for inspection needed to have exactly the same orientation, shape and distance from the sensor for every image. Pre-programmed onboard software would return a true/false or 1/0 response, which might trigger an “accept” or “reject” message for the machine or operator.
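To make the fixed-setup approach concrete, here is a minimal Python sketch of the kind of true/false check described above. Everything in it is an assumption for illustration: the hypothetical `gasket_present` and `verdict` functions, the region of interest and the brightness thresholds are invented, and real onboard camera software is not written like this. It does show why these systems needed identical positioning: the check only looks at one hard-coded patch of pixels.

```python
from typing import List

Image = List[List[int]]  # 8-bit grayscale pixels, indexed as image[row][col]

# Hypothetical, hand-tuned constants. They only hold if lighting, part
# position and sensor distance are identical for every image.
ROI = (2, 2, 4, 4)        # (top, left, height, width) where the gasket must sit
DARK_LEVEL = 60           # pixels at or below this value count as "gasket"
MIN_DARK_FRACTION = 0.8   # share of the ROI that must be dark to pass


def gasket_present(image: Image) -> bool:
    """Return True (1) if enough of the fixed ROI is dark, else False (0)."""
    top, left, height, width = ROI
    dark = sum(
        1
        for r in range(top, top + height)
        for c in range(left, left + width)
        if image[r][c] <= DARK_LEVEL
    )
    return dark / (height * width) >= MIN_DARK_FRACTION


def verdict(image: Image) -> str:
    """Map the true/false result to the message sent to the machine or operator."""
    return "accept" if gasket_present(image) else "reject"


def make_part(top: int, left: int) -> Image:
    """Synthetic 8x8 image: bright background with a dark 4x4 'gasket'."""
    image = [[200] * 8 for _ in range(8)]
    for r in range(top, top + 4):
        for c in range(left, left + 4):
            image[r][c] = 30
    return image


print(verdict(make_part(2, 2)))  # prints: accept (gasket exactly where expected)
print(verdict(make_part(4, 4)))  # prints: reject (same good part, shifted 2 pixels)
```

The second part is just as good as the first, yet it is rejected because it arrived slightly out of position, which is exactly the brittleness that newer systems try to remove.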

Introducing Advanced Machine Vision Software

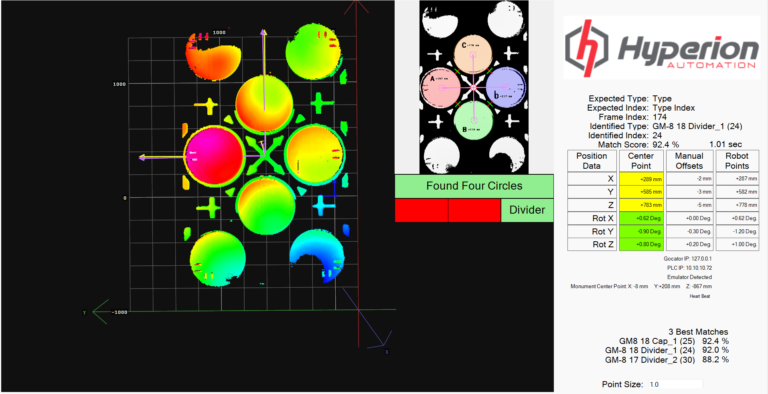

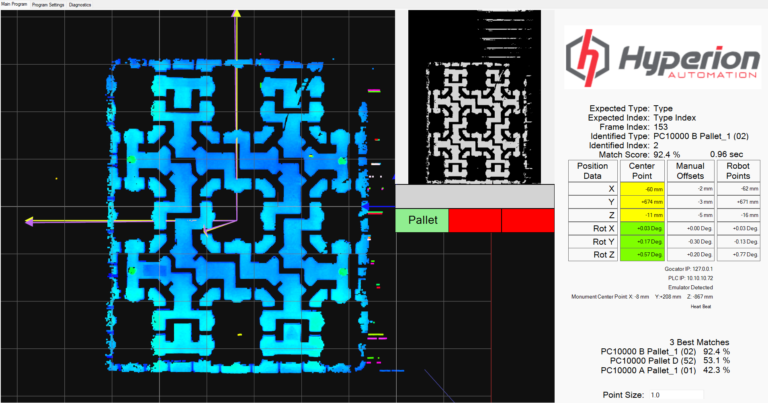

A lot has changed in quality assurance (QA) over the past 20 years, and Dana now works with the next generation of vision systems. Because Aurora™ Vision software (formerly Adaptive Vision) is hardware-agnostic, Dana can pull datasets from many types of sensors and camera brands, including:

- Traditional 2D vision systems

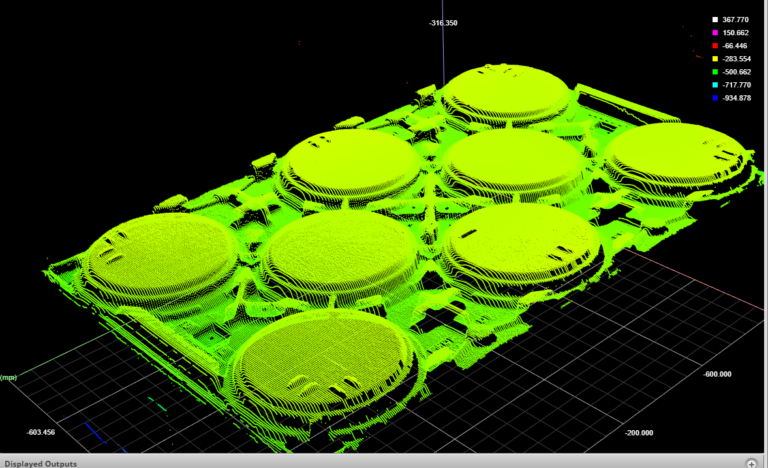

- 3D laser sensors like LMI’s Gocator

- Color detection cameras

Unlike a barcode scan or a single image, processing information from these sources gives you a much more complete picture of the physical state of your product. Next-generation machine vision solutions let you:

- Run multiple complex assessments

- Process one or more images simultaneously

- Run multiple image manipulations, such as crops, translations, luminosity adjustments, pattern identification or advanced filters, in whatever order you need

- Clean up image noise using reflection filters
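The point about running manipulations “in whatever order you need” is easier to see in code. Below is a minimal Python sketch, assuming a plain grayscale image stored as nested lists; `crop`, `adjust_brightness`, `shift_right` and `run_pipeline` are hypothetical helpers, not Aurora Vision’s API, and pattern identification and advanced filters are left out. Each operation is a function from image to image, so a pipeline is simply a list you can reorder.

```python
from typing import Callable, List

Image = List[List[int]]  # 8-bit grayscale pixels, indexed as image[row][col]
Op = Callable[[Image], Image]


def crop(top: int, left: int, height: int, width: int) -> Op:
    """Keep only the given rectangle."""
    return lambda img: [row[left:left + width] for row in img[top:top + height]]


def adjust_brightness(delta: int) -> Op:
    """Add delta to every pixel, clamped to the 0-255 range."""
    return lambda img: [[min(255, max(0, p + delta)) for p in row] for row in img]


def shift_right(dx: int) -> Op:
    """Translate the image dx pixels to the right, filling with black (assumes dx >= 0)."""
    def op(img: Image) -> Image:
        out = []
        for row in img:
            n = min(dx, len(row))
            out.append([0] * n + row[:len(row) - n])
        return out
    return op


def run_pipeline(img: Image, ops: List[Op]) -> Image:
    """Apply each operation in the order given, returning a new image."""
    for op in ops:
        img = op(img)
    return img


image = [[10, 20, 30, 40] for _ in range(3)]
print(run_pipeline(image, [shift_right(1), crop(0, 2, 2, 2)]))  # [[20, 30], [20, 30]]
print(run_pipeline(image, [crop(0, 2, 2, 2), shift_right(1)]))  # [[0, 30], [0, 30]]
print(run_pipeline(image, [crop(0, 0, 1, 4), adjust_brightness(230)]))  # [[240, 250, 255, 255]]
```

The first two pipelines use the same operations in a different order and produce different results, which is why being able to choose the order matters.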

“These days, traditional 2D systems are a lot better, but they still depend on reducing the variables. It’s all about lighting,” Dana explained. “The shape, size or color of the product matters. They’re so sensitive that we’ve seen instances where an operator was wearing a bright shirt, and that would throw off the system. Adaptive vision solves for these types of problems.”

Robotic Guidance Systems

Machine vision breakthroughs are also changing the way we use robots.

“Robots are extremely precise, meaning they’re great at performing the exact same movements at the exact same location every time. The ability to repeat precise motions is a robot’s greatest strength, but also its greatest weakness,” Dana explained. “The problem is that the world isn’t built that way. It’s tough to control your entire process down to one-tenth of a millimeter.”

There are other complicating factors. Human error, changes in operator styles, and even your plant floor’s environmental conditions like temperature and humidity can affect your product shape, position or orientation. Without eyes or a brain, a robot is going to have a big problem when it goes to interact with a product that doesn’t present the same way every time.

Hyperion Automation uses adaptive vision systems to make robot applications more flexible. If a camera gives a robot eyes, then machine vision software gives it a brain. In short, it lets robots, which tend to be coldly systematic, see and think more like a human, especially in scenarios with variation or nonuniform products.

“We’ve gotten good at this because we’re pessimistic by nature,” joked Dana. “Coming from a maintenance background, I’ve had enough experience with things going wrong to learn that you need to be pragmatic: if your plan assumes that nothing will change and everything will go perfectly every time anyone uses it, you’re going to run into trouble.” When we approach a product, we assume that little things are going to go wrong. You could design a system that simply rejects the part if it’s 1 mm off or twisted 12° coming down the belt, but we anticipate these small inconsistencies by design and use smart machine vision software to handle the problem the way a human would: by adapting.

Conditional Logic for Custom Workflows

One of the best features of advanced systems is that many of them support conditional logic: the software can decide which inspections to run based on the results of earlier steps.

“So maybe you use the first few image operations to decide what product is coming down the line. Then, maybe you can determine the model, and after that the color. It’s almost like a decision flowchart built into the software logic,” said Dana when discussing Aurora Vision.

This matters because the machine doesn’t need to know in advance what it’s looking at. It can figure that out from earlier results, then determine which inspections to run or how your robots should respond.
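Dana’s flowchart description maps naturally onto code. The Python sketch below is a hypothetical illustration, not how Aurora Vision is actually configured: the image-processing steps are replaced by a dictionary of already-measured values, and the product names, measurement keys (`length_mm`, `hole_count` and so on) and thresholds are all invented. The point is the structure: identify the product, then the model, then the color, and only then decide which inspections apply.

```python
from typing import Callable, Dict, List, Tuple

Measurements = Dict[str, float]  # stand-in for features measured from images
Check = Callable[[Measurements], bool]

# Every rule and threshold below is hypothetical, chosen for illustration.


def identify_product(m: Measurements) -> str:
    return "bracket" if m["length_mm"] < 100 else "housing"


def identify_model(m: Measurements) -> str:
    return "narrow" if m["width_mm"] < 40 else "wide"


def identify_color(m: Measurements) -> str:
    return "black" if m["brightness"] < 80 else "gray"


def plan_inspections(product: str, model: str, color: str) -> List[Tuple[str, Check]]:
    """Choose which checks to run based on what the earlier steps found."""
    checks: List[Tuple[str, Check]] = []
    if product == "bracket":
        expected_holes = 2 if model == "narrow" else 4
        checks.append(("hole count", lambda m: m["hole_count"] == expected_holes))
    else:
        checks.append(("gasket seated", lambda m: m["gasket_offset_mm"] <= 0.5))
    if color == "gray":
        # Assumed: scratches are only checked on the lighter finish.
        checks.append(("no scratches", lambda m: m["scratch_px"] < 50))
    return checks


def inspect(m: Measurements) -> Dict[str, object]:
    """Identify the part step by step, then run only the checks that apply."""
    product, model, color = identify_product(m), identify_model(m), identify_color(m)
    failed = [name for name, check in plan_inspections(product, model, color)
              if not check(m)]
    return {"product": product, "model": model, "color": color,
            "good": not failed, "failed": failed}


bracket = {"length_mm": 80, "width_mm": 50, "brightness": 150,
           "hole_count": 4, "scratch_px": 120}
housing = {"length_mm": 220, "width_mm": 90, "brightness": 40,
           "gasket_offset_mm": 0.3}
print(inspect(bracket))  # wide gray bracket: fails "no scratches"
print(inspect(housing))  # wide black housing: passes its only check
```

The housing never needs a hole count or scratch measurement, because the earlier identification steps ruled those checks out.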

“In short, it can tell you what it sees and whether or not it’s a good part. It’s a subtle difference, but when you’ve got a high-stakes product line with little margin for error, it matters to have a system in place that can run those checks efficiently.”

Full Integration

Advanced machine vision software also creates the opportunity for true full-system integration. Whereas some traditional vision systems are isolated to a single cell, advanced software installs into a PC environment at your panel, allowing you to:

- Integrate with your programmable logic controllers (PLCs)

- Communicate directly with your enterprise resource planning (ERP) software

- Update your database with real-time information

- Run reports and track error logs

- Provide images associated with logs to assist troubleshooting

- Give your production or QA teams the feedback they need to fix problems and optimize your floor

Limitations

Like any software, machine vision technology is only as good as the data you feed it.

“If you need to check something very precise,” Dana explained, “say, the quality of the threading on a bolt, you’re still going to need a great camera to feed good data into the system. A 3D laser alone might give you enough data to see what kind of bolt it is, and even the pitch of the thread, but the software isn’t magic. It can’t create data that isn’t there.”

When is something like Aurora Vision the right choice for my application?

Advanced machine vision software is a game changer, but it’s not the right choice for every application.

| Factor | Advanced Machine Vision when you have: | Traditional 2D Vision System when you have: |
|---|---|---|
| Uniformity | Variety | Uniformity |
| Number of Images | Multiple Images | One Image |
| Complexity of Inspection | Complex Inspections | Simple Inspections |
| Precision | Most precision levels | Highly precise applications |
| Number of Products on the Line | Multiple products | Only one product |
| Position/Orientation | Any position, orientation or distance (within reason) | Consistent position, orientation and distance from sensor |
| Lighting | Varied colors, lightness, or backlighting | Lighting environment that is easy to control |
| Size | Varied product sizes | Product sizes that don’t change |
| Product Color | Light or dark colors | Colors that have consistent brightness |

In summary, advanced machine vision is best when you need to know more than one piece of information, need to process more than one product, or have limited control over the image environment on your line.

When you only need to run one check and you have good control over the image environment (e.g., part orientation, position, lighting and sensor distance), a traditional vision system can probably get the job done. However, in rapidly evolving, high-change industries, that scenario is increasingly hard to find.

Take the Guesswork out of Your Line

Hyperion Automation team members, including Dana, have years of experience integrating vision systems that improve quality and speed up processes for our clients. If you have a line that you think could benefit from advanced machine vision, let’s talk.